AI In Software Testing


The digital world has seen an exponential increase in the frequency of software releases over the past few years. This escalates the need for quicker, more efficient, and more stable testing processes. The pressure to deliver flawless software is at an all-time high, and there is broad agreement that test automation, even when partially implemented, can significantly alleviate this strain.

Nevertheless, a common issue most companies face is that their test coverage is not as comprehensive as they’d prefer. Why is this so? The answer typically lies in the inefficiencies embedded within most of the prevailing test automation frameworks. These can manifest as:

- A complex initial setup process that takes considerable time and resources

- Intricate test implementation procedures that require extensive coding skills and deep technical knowledge

- Tedious, time-consuming test maintenance that leads to inefficiencies and delays

Many people would say: “Well, this is just the nature of it. You can’t build test automation and not break a sweat.”

But what if you actually can?

What is AI Testing?

AI-powered testing uses machine learning, natural language processing, and advanced data analytics to improve test accuracy, automate repetitive tasks, and even predict issues before they become critical. It has the potential to substantially improve traditional approaches to software testing, offering a range of benefits, including:

- Automatically generating test cases, effectively reducing the time required for test creation

- Providing assistance during test creation, making the process simpler and more intuitive

- Improving test stability, thus reducing false failures and other erroneous results

- Detecting elements on the screen, thereby aiding in more accurate test execution

- Automatically identifying issues, helping in proactive problem resolution, and enhancing the software’s quality

- Using AI to test AI, i.e., AI agents help you test LLMs, AI features, chatbots, and more

Historically, there has been some disconnect between manual and automation QA personnel within a team. However, this disconnect diminishes when everyone on the team is equipped to handle both the manual and automation aspects of QA. This unified approach ensures that all team members can fully participate in the quality assurance process from inception to completion. Does this signify that QA roles are on the brink of becoming obsolete due to automation? We strongly believe this is not the case. There is no concrete evidence pointing towards the elimination of QA roles in the foreseeable future. What we do foresee is a metamorphosis in how QA teams function.

We envision QA teams becoming more robust and empowered in this latest testing paradigm. AI improves their capacity to deliver superior-quality software faster and more efficiently. This is not just about adopting new tools; it’s about having a new mindset and transforming the very essence of QA practice.

Types of AI Testing

Here are the key testing types where AI can help, grouped by purpose, methodology, and level of AI involvement. The list also covers how AI-based systems themselves can be tested using these intelligent test automation tools:

1. Functional Testing

Functional testing verifies that the software behaves as expected based on given inputs.

- Verifies output accuracy based on input for the AI system or a normal software application.

- Confirms that the system meets the expected requirements.

- For example, an AI chatbot can be tested to see if it responds correctly to user queries.
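A functional check for a chatbot can be sketched as a table of queries paired with keywords that a correct answer should mention. In this minimal sketch, `chatbot_reply` is a hypothetical rule-based stand-in for the real chatbot, and keyword matching is a deliberately simple assumption; real LLM output varies in wording, so production suites often use looser or semantic checks.

```python
from dataclasses import dataclass


def chatbot_reply(query: str) -> str:
    """Hypothetical rule-based stand-in for the chatbot under test."""
    q = query.lower()
    if "opening hours" in q:
        return "We are open Monday to Friday, 9 am to 5 pm."
    if "refund" in q:
        return "You can request a refund within 30 days of purchase."
    return "Sorry, I did not understand that."


@dataclass
class ChatCase:
    query: str
    must_contain: list[str]  # keywords a correct answer should mention


def run_functional_suite(cases: list[ChatCase]) -> list[str]:
    """Return a description of every case whose reply misses an expected keyword."""
    failures = []
    for case in cases:
        reply = chatbot_reply(case.query).lower()
        missing = [kw for kw in case.must_contain if kw.lower() not in reply]
        if missing:
            failures.append(f"{case.query!r}: missing {missing}")
    return failures


cases = [
    ChatCase("What are your opening hours?", ["Monday", "9 am"]),
    ChatCase("How do I get a refund?", ["30 days"]),
    ChatCase("Can I pay with crypto?", ["payment"]),  # intent the stub cannot handle
]
print(run_functional_suite(cases))
```

Here only the third case fails, because the stand-in bot has no answer for payment questions: exactly the kind of gap a functional suite should surface.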

2. Performance Testing

- Tests the system’s speed, responsiveness, and stability under varying workloads.

- One example is load testing an AI-powered recommendation engine under high traffic.
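A minimal load-test sketch, assuming a hypothetical `recommend` function stands in for the recommendation engine (it only sleeps briefly). It fires concurrent requests from a thread pool and reports nearest-rank latency percentiles; the 0.5 s p95 target is an assumed service-level goal, and a real load test would hit the deployed service over the network with a dedicated tool.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor

SLA_P95_SECONDS = 0.5  # hypothetical service-level target


def recommend(user_id: int) -> list[int]:
    """Hypothetical stand-in for the recommendation service under test."""
    time.sleep(0.001)  # simulate a little processing time
    return [(user_id * 7 + i) % 100 for i in range(5)]


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile, pct in the range 0-100."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def timed_call(user_id: int) -> float:
    start = time.perf_counter()
    recommend(user_id)
    return time.perf_counter() - start


def load_test(num_requests: int, concurrency: int) -> dict[str, float]:
    """Send num_requests calls using `concurrency` worker threads and summarize latency."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed_call, range(num_requests)))
    return {
        "requests": len(latencies),
        "p50_s": percentile(latencies, 50),
        "p95_s": percentile(latencies, 95),
        "max_s": max(latencies),
    }


result = load_test(num_requests=200, concurrency=20)
assert result["p95_s"] < SLA_P95_SECONDS, result
```

Percentiles are used rather than averages because a few very slow requests, which users notice most, can hide behind a healthy mean.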

3. Security Testing

Security testing identifies vulnerabilities that could be exploited by attackers.

- Identifies vulnerabilities in the AI model and software system.

- By analyzing patterns in past breaches, AI can help predict and mitigate future security risks.

- Tests for adversarial attacks that could manipulate AI predictions.

4. Bias and Fairness Testing

Testing that AI models do not exhibit unfair biases is important for ethical applications.

- AI algorithms review training data and model outputs to identify biased patterns.

- One example is checking if an AI hiring tool discriminates based on gender or race.
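One common fairness check for a hiring model compares selection rates across groups. The 0.8 threshold below follows the "four-fifths rule," a screening heuristic often used in US employment audits. This sketch assumes model decisions are already available as (group, selected) pairs; the audit data is made up, and passing this single metric does not by itself establish that a model is fair.

```python
from collections import defaultdict


def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive decisions (e.g. 'advance to interview') per group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, selected in outcomes:
        totals[group] += 1
        positives[group] += int(selected)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact(outcomes: list[tuple[str, bool]],
                     threshold: float = 0.8) -> tuple[float, bool]:
    """Lowest-to-highest selection-rate ratio, and whether it falls below threshold."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        return 1.0, False  # nobody selected in any group: no disparity to measure
    ratio = min(rates.values()) / highest
    return ratio, ratio < threshold


# Hypothetical audit data: 50 of 100 group-A applicants advance, 30 of 100 group-B.
outcomes = [("A", i < 50) for i in range(100)] + [("B", i < 30) for i in range(100)]
ratio, flagged = disparate_impact(outcomes)
print(f"impact ratio={ratio:.2f}, flagged={flagged}")
```

A ratio of 0.6 is well under 0.8, so this model would be flagged for a closer look at its training data and features.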

5. Explainability and Interpretability Testing

It is important that AI decisions are transparent and understandable.

- Explainability techniques help reveal how a model arrives at its decisions.

- This is especially critical in sectors like healthcare, where understanding an AI model’s reasoning is paramount.

- SHAP (SHapley Additive exPlanations) or LIME (Local Interpretable Model-agnostic Explanations) techniques can be used to interpret model predictions.
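SHAP builds on Shapley values from game theory: each feature's contribution is its average marginal effect across all coalitions of the other features. The sketch below computes exact Shapley values by brute force, assuming that features outside a coalition are replaced by a baseline value (one of several possible choices). Its cost grows as 2^n, which is why SHAP libraries rely on approximations for real models; `risk_model` is a hypothetical three-feature model.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(model: Callable[[Sequence[float]], float],
                   x: Sequence[float],
                   baseline: Sequence[float]) -> list[float]:
    """Exact Shapley values by enumerating every coalition of features.

    Features outside a coalition take their baseline value.
    """
    n = len(x)

    def value(coalition: set[int]) -> float:
        point = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(point)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S without i
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


def risk_model(v: Sequence[float]) -> float:
    """Hypothetical linear risk score with three features."""
    return 2.0 * v[0] - 3.0 * v[1] + 0.5 * v[2]


phis = shapley_values(risk_model, x=[1.0, 2.0, 4.0], baseline=[0.0, 0.0, 0.0])
print(phis)
```

For a linear model, each value equals the feature's weight times its distance from the baseline (here 2, -6, and 2, up to floating-point rounding), and the values always sum to the difference between the prediction and the baseline prediction, which makes them easy to sanity-check.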

6. Data Testing

Quality data is the foundation of any AI system, therefore:

- Data should be tested for training data quality, completeness, and correctness.

- For example, validating dataset integrity for an AI fraud detection system.
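Dataset checks like these can be expressed as plain rules run before training. This minimal sketch assumes a hypothetical record layout for a fraud-detection dataset (`txn_id`, `amount`, and `is_fraud` labelled 0 or 1) and covers completeness, value correctness, and uniqueness; dedicated data-validation tools add statistical checks on top.

```python
def validate_transactions(rows: list[dict]) -> list[str]:
    """Return data-quality issues found in a fraud-detection training set."""
    required = ("txn_id", "amount", "is_fraud")
    issues = []
    seen_ids = set()
    for idx, row in enumerate(rows):
        # Completeness: every required field must be present and non-null.
        for name in required:
            if row.get(name) is None:
                issues.append(f"row {idx}: missing {name}")
        # Correctness: amounts cannot be negative, labels must be 0 or 1.
        amount = row.get("amount")
        if amount is not None and amount < 0:
            issues.append(f"row {idx}: negative amount {amount}")
        label = row.get("is_fraud")
        if label is not None and label not in (0, 1):
            issues.append(f"row {idx}: invalid label {label!r}")
        # Integrity: transaction ids must be unique.
        txn_id = row.get("txn_id")
        if txn_id is not None:
            if txn_id in seen_ids:
                issues.append(f"row {idx}: duplicate txn_id {txn_id}")
            seen_ids.add(txn_id)
    return issues


rows = [
    {"txn_id": "t1", "amount": 120.0, "is_fraud": 0},
    {"txn_id": "t2", "amount": -5.0, "is_fraud": 1},
    {"txn_id": "t2", "amount": 40.0, "is_fraud": 2},
    {"txn_id": "t4", "amount": None, "is_fraud": 0},
]
for issue in validate_transactions(rows):
    print(issue)
```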

7. Regression Testing

Regression testing checks that updates or changes do not break existing functionality.

- AI can prioritize test cases based on historical data and recent code changes.

- Test that AI model updates or retraining do not introduce unexpected issues, and previous functionalities still work.
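Prioritization from history and recent changes can be sketched as a scoring function. This is a simple weighted heuristic rather than a trained model: the record layout, the weight of 2.0 per changed file, and the choice to run never-executed tests early are all assumptions; AI-based tools typically learn such weights from past runs.

```python
from dataclasses import dataclass, field


@dataclass
class CaseHistory:
    name: str
    runs: int                 # historical executions
    failures: int             # historical failures
    covered_files: set[str] = field(default_factory=set)  # source files it exercises


def prioritize(tests: list[CaseHistory], changed_files: set[str],
               change_weight: float = 2.0) -> list[str]:
    """Order tests by failure rate plus a bonus for each changed file they cover."""
    def score(t: CaseHistory) -> float:
        # Tests with no history get the maximum failure rate so they run early.
        failure_rate = t.failures / t.runs if t.runs else 1.0
        overlap = len(t.covered_files & changed_files)
        return failure_rate + change_weight * overlap

    return [t.name for t in sorted(tests, key=score, reverse=True)]


history = [
    CaseHistory("test_login", runs=100, failures=2, covered_files={"auth.py"}),
    CaseHistory("test_checkout", runs=50, failures=10, covered_files={"cart.py", "payment.py"}),
    CaseHistory("test_search", runs=80, failures=0, covered_files={"search.py"}),
    CaseHistory("test_new_coupon", runs=0, failures=0, covered_files={"cart.py"}),
]
order = prioritize(history, changed_files={"payment.py"})
print(order)
```

With only `payment.py` changed, the checkout test jumps to the front, the brand-new coupon test follows, and the stable search test runs last.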

8. Adversarial Testing

This involves exposing AI models to malicious inputs to test their robustness.

- Feed AI models deliberately perturbed or malicious inputs to check whether their behavior stays correct.

- For example, distorted images can be tested in an AI vision system to check if they are misclassified.
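A well-known way to craft adversarial inputs is the Fast Gradient Sign Method (FGSM): nudge every input feature by a small step `epsilon` in the direction that increases the model's loss. Vision systems apply it to pixel values; to stay self-contained, this sketch applies it to a tiny logistic-regression model with made-up weights and checks whether the prediction survives.

```python
import math
from typing import Sequence


def predict_proba(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Logistic-regression probability of class 1."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def fgsm(w: Sequence[float], b: float, x: Sequence[float],
         y_true: int, epsilon: float) -> list[float]:
    """Fast Gradient Sign Method for a logistic-regression model.

    For log-loss, d(loss)/dx = (p - y) * w, so each feature moves by
    epsilon in the direction that increases the loss.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y_true) * wi for wi in w]
    return [xi + epsilon * math.copysign(1.0, g) if g != 0 else xi
            for xi, g in zip(x, grad)]


def is_robust(w: Sequence[float], b: float, x: Sequence[float],
              y_true: int, epsilon: float) -> bool:
    """True if the predicted class survives an FGSM perturbation of size epsilon."""
    x_adv = fgsm(w, b, x, y_true, epsilon)
    return (predict_proba(w, b, x_adv) >= 0.5) == bool(y_true)


# Hypothetical two-feature model and a correctly classified input.
w, b = [1.5, -2.0], 0.1
x, y = [0.6, 0.2], 1
for eps in (0.05, 0.2):
    print(f"epsilon={eps}: robust={is_robust(w, b, x, y, eps)}")
```

Sweeping `epsilon` like this gives a rough robustness margin: the smallest perturbation that flips the prediction.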

9. Model Drift Testing

Model drift occurs when the performance of an AI system degrades over time due to evolving data patterns.

- AI tools continuously track model performance and flag drift early, before it significantly degrades results.

- One example is to detect accuracy drops in a credit-scoring AI due to shifting economic conditions.
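Drift monitoring often starts by comparing the distribution of a feature or score in live traffic with the training data. This sketch computes the Population Stability Index (PSI) with equal-width bins over the reference range; the bin count, the 1e-6 floor for empty bins, and the sample data are assumptions. A commonly quoted rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as moderate shift, and above 0.25 as significant drift.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index of `actual` against the `expected` reference sample.

    Bins are equal-width over the reference range; values outside it are
    clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def shares(sample: list[float]) -> list[float]:
        counts = [0] * bins
        for v in sample:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical credit-score feature: training spreads over 0-1, live data shifted high.
training = [i / 100 for i in range(100)]
live = [0.5 + i / 200 for i in range(100)]
print(f"PSI = {psi(training, live):.2f}")
```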

10. Ethical and Compliance Testing

Testing adherence to industry regulations and ethical standards is vital, especially for AI systems handling sensitive data.

- Test that AI meets industry regulations and ethical guidelines.

- One classic example is testing a medical AI system for HIPAA and GDPR compliance.
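One small, automatable piece of compliance testing is checking that model responses do not leak personal data. The regex patterns in this sketch are illustrative assumptions (US-style SSN and phone formats, a simplified email pattern); a regex scan is nowhere near a full HIPAA or GDPR assessment, but it makes a useful regression check in a test suite.

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def find_pii(text: str) -> dict[str, list[str]]:
    """Return every PII-like match in a model response, grouped by type."""
    hits = {}
    for kind, pattern in PII_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            hits[kind] = matches
    return hits


response = "Patient John's SSN is 123-45-6789; contact him at john.doe@example.com."
leaks = find_pii(response)
print(leaks or "no PII found")
```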

11. Autonomous Testing (AI for AI Testing)

AI is not only tested; with the support of AI agents, it can also test other systems.

- Uses AI-driven tools to automate testing processes.

- Example: Using testRigor to automate tests for AI applications, web, mobile, desktop, and API.

What is the Role of AI in Software Testing?

1. Intelligent Test Case Generation

- AI automatically generates test cases based on user behavior, app or test descriptions, or specifications.

- Uses historical data to identify critical areas for testing and prioritize high-risk test cases, so essential scenarios are tested first, boosting efficiency.

- Reduces test coverage gaps by predicting potential issues.
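To see what "generating test cases from a spec" produces, here is a rule-based sketch of boundary-value analysis, a classic technique that generators (AI-driven or not) commonly apply to numeric fields. It is not AI itself; the `FieldSpec` structure and the age rule are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FieldSpec:
    name: str
    min_value: int
    max_value: int


def boundary_cases(spec: FieldSpec) -> list[tuple[str, int, bool]]:
    """Boundary-value analysis: values at, just inside, and just outside each limit.

    Each case is (field, input value, whether the input should be accepted).
    """
    lo, hi = spec.min_value, spec.max_value
    candidates = [lo - 1, lo, lo + 1, hi - 1, hi, hi + 1]
    # dict.fromkeys drops duplicates (e.g. for narrow ranges) while keeping order
    return [(spec.name, v, lo <= v <= hi) for v in dict.fromkeys(candidates)]


# Hypothetical spec line: "Age must be between 18 and 65."
for field_name, value, accepted in boundary_cases(FieldSpec("age", 18, 65)):
    print(f"enter {value} into {field_name}: expect {'accept' if accepted else 'reject'}")
```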

2. Test Automation Enhancement

- AI-driven test automation tools, like testRigor, allow for automated test script generation and execution.

- Self-healing tests adapt to UI changes, preventing script failures and reducing maintenance effort.

- AI can generate test scripts in plain English, making automation accessible to non-technical users.
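The core idea behind self-healing locators can be sketched without a browser: try the recorded locator first, and if it no longer matches, fall back to the element that best resembles the element's other recorded attributes. In this sketch the DOM is modeled as a list of attribute dicts, and the attribute set, `difflib` string similarity, and 0.7 threshold are all assumptions; commercial tools use richer signals.

```python
from difflib import SequenceMatcher


def similarity(recorded: dict, candidate: dict) -> float:
    """Average string similarity over the non-id attributes recorded for an element."""
    keys = [k for k in recorded if k != "id"]
    if not keys:
        return 0.0
    return sum(SequenceMatcher(None, str(recorded[k]), str(candidate.get(k, ""))).ratio()
               for k in keys) / len(keys)


def find_element(dom: list[dict], recorded: dict,
                 threshold: float = 0.7) -> tuple[dict, bool]:
    """Locate an element by its recorded id, healing to the closest match if the id is gone.

    Returns (element, healed); when healed is True, the stored locator should be updated.
    """
    for element in dom:
        if element.get("id") == recorded["id"]:
            return element, False
    best = max(dom, key=lambda el: similarity(recorded, el), default=None)
    if best is not None and similarity(recorded, best) >= threshold:
        return best, True
    raise LookupError(f"no element resembles {recorded}")


# The button's id changed in a new release, but its tag and visible text did not.
recorded = {"id": "submit-btn", "tag": "button", "text": "Register"}
new_dom = [
    {"id": "nav-home", "tag": "a", "text": "Home"},
    {"id": "btn-register-v2", "tag": "button", "text": "Register"},
]
element, healed = find_element(new_dom, recorded)
print(element["id"], "healed" if healed else "matched")
```

Raising an error when nothing is similar enough matters: silently "healing" to the wrong element would turn a visible failure into a misleading pass.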

3. Test Execution Optimization

- AI speeds up test execution by prioritizing critical test cases, saving time and resources by running only the essential tests.

- Parallel execution across multiple environments reduces test execution time.

- AI identifies redundant tests and eliminates unnecessary executions.
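A simple baseline for spotting redundant tests is coverage subsumption: a test is a removal candidate if some single other test covers every line it covers. This sketch assumes per-test coverage is available as sets of `file:line` strings (a hypothetical format). Identical coverage does not guarantee identical assertions, so the output is a list for human review, and tests covered only by a combination of other tests are not detected.

```python
def find_redundant(coverage: dict[str, set[str]]) -> list[str]:
    """Return tests whose covered lines are all covered by some single other test.

    When two tests cover exactly the same lines, the alphabetically first one is
    kept and the other is reported.
    """
    names = sorted(coverage)
    redundant = []
    for name in names:
        mine = coverage[name]
        for other in names:
            if other == name:
                continue
            theirs = coverage[other]
            if mine < theirs or (mine == theirs and other < name):
                redundant.append(name)
                break
    return redundant


coverage = {
    "test_add_item": {"cart.py:10", "cart.py:11"},
    "test_add_and_remove": {"cart.py:10", "cart.py:11", "cart.py:20"},
    "test_search": {"search.py:5"},
    "test_add_remove_copy": {"cart.py:10", "cart.py:11", "cart.py:20"},
}
print(find_redundant(coverage))
```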

4. Defect Prediction and Root Cause Analysis

- AI predicts where defects are most likely to occur based on historical test data.

- Identifies patterns in defects, helping developers fix issues faster.

- Speeds up debugging by pinpointing likely root causes and explaining them in plain English.

Here is an example: AI finds that most defects occur in the checkout pages of an e-commerce app, so testing efforts are focused on payment flow stability.
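The checkout example can be reproduced with a simple statistical baseline: rank modules by defect density (past defects per thousand lines of code). Predictive tools add more signals, such as code churn and complexity; this sketch uses only the defect log, and the module names and sizes are made up.

```python
from collections import Counter


def rank_risky_modules(defects: list[str], kloc: dict[str, float],
                       top: int = 3) -> list[tuple[str, float]]:
    """Rank modules by historical defects per thousand lines of code (KLOC)."""
    counts = Counter(defects)
    density = {module: counts[module] / size for module, size in kloc.items()}
    # sorted() is stable, so ties keep the order in which modules were listed
    return sorted(density.items(), key=lambda kv: kv[1], reverse=True)[:top]


# Hypothetical defect log (one entry per past bug) and module sizes in KLOC.
defect_log = ["checkout"] * 12 + ["search"] * 3 + ["profile"]
module_kloc = {"checkout": 4.0, "search": 6.0, "profile": 2.0, "admin": 5.0}
for module, density in rank_risky_modules(defect_log, module_kloc):
    print(f"{module}: {density:.1f} defects/KLOC")
```

Normalizing by size keeps large modules from looking risky merely because they contain more code.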

5. Visual and UI Testing

- AI can compare images and detect visual discrepancies across different devices through visual testing capabilities.

- Helps achieve UI consistency and accessibility.

- AI-powered tools recognize dynamic elements and validate layouts.
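The comparison step in visual testing can be illustrated with a plain pixel diff that tolerates small rendering noise. AI-based tools go beyond this (for example, by ignoring dynamic content or understanding layout), so treat this as the baseline they improve on. Screenshots are modeled as grayscale rows of 0-255 values, and the tolerance and 1% changed-pixel budget are assumptions.

```python
def visual_diff(baseline: list[list[int]], current: list[list[int]],
                tolerance: int = 10, max_changed_ratio: float = 0.01):
    """Compare two same-sized grayscale screenshots (rows of 0-255 values).

    Pixels whose values differ by more than `tolerance` count as changed; the
    check passes when the share of changed pixels is at most `max_changed_ratio`.
    Returns (passed, changed_pixel_coordinates).
    """
    if len(baseline) != len(current) or any(
            len(a) != len(b) for a, b in zip(baseline, current)):
        raise ValueError("screenshots must have the same dimensions")
    changed = [(r, c)
               for r, (row_a, row_b) in enumerate(zip(baseline, current))
               for c, (pa, pb) in enumerate(zip(row_a, row_b))
               if abs(pa - pb) > tolerance]
    total = sum(len(row) for row in baseline)
    return len(changed) / total <= max_changed_ratio, changed


baseline = [[200] * 10 for _ in range(10)]
anti_aliased = [row[:] for row in baseline]
anti_aliased[0][0] = 205  # minor rendering noise, within tolerance
broken = [row[:] for row in baseline]
for r in range(3):
    for c in range(3):
        broken[r][c] = 0  # a 3x3 block went black, e.g. a missing icon

print(visual_diff(baseline, anti_aliased)[0], visual_diff(baseline, broken)[0])
```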

6. NLP-Based Test Automation (Plain English Scripting)

- AI allows natural language test writing, making automation easy for non-coders.

- Eliminates the need to know complex scripting languages.

- AI translates human-readable instructions into executable tests.

    enter "John" into "First Name"
    enter "Doe" into "Last Name"
    click "Register"
    check that page contains "Registration complete"
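testRigor's own engine is not public, so the following is only a deliberately simplified illustration of the translation step: a rule-based parser (regular expressions, not NLP) that turns the plain-English script above into structured actions a test runner could execute. The grammar and action names are hypothetical and cover only these three commands.

```python
import re

# Hypothetical grammar covering only the commands in the example script.
STEP_PATTERNS = [
    (re.compile(r'^enter "(?P<value>[^"]*)" into "(?P<target>[^"]*)"$'), "type"),
    (re.compile(r'^click "(?P<target>[^"]*)"$'), "click"),
    (re.compile(r'^check that page contains "(?P<value>[^"]*)"$'), "assert_text"),
]


def parse_steps(script: str) -> list[dict]:
    """Translate plain-English steps (one per line) into structured actions."""
    actions = []
    for line_no, raw in enumerate(script.strip().splitlines(), start=1):
        line = raw.strip()
        if not line:
            continue  # ignore blank lines between steps
        for pattern, action in STEP_PATTERNS:
            match = pattern.match(line)
            if match:
                actions.append({"action": action, **match.groupdict()})
                break
        else:
            raise ValueError(f"line {line_no}: cannot understand {line!r}")
    return actions


script = '''
enter "John" into "First Name"
enter "Doe" into "Last Name"
click "Register"
check that page contains "Registration complete"
'''
for step in parse_steps(script):
    print(step)
```

A real NLP-based engine would also tolerate paraphrases and locate "First Name" by its visible label on screen, which is precisely what a fixed grammar like this cannot do.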

7. AI in Performance and Load Testing

- AI simulates real-world user behavior to test system performance under load.

- Predicts bottlenecks and provides optimization suggestions.

- Helps in auto-scaling applications based on traffic patterns.

For example, AI detects that a server slows down when more than 500 users log in at once, and its auto-scaling suggestions help prevent crashes.

8. AI in Security Testing

- AI detects vulnerabilities and helps prevent potential cyberattacks. Read more about Cybersecurity Testing.

- Scans code for security loopholes automatically.

- AI-driven penetration testing finds weak points in APIs and applications.

For example, AI detects an SQL injection vulnerability in an e-commerce website’s login form before deployment, preventing a potential data breach.
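The login-form example can be reproduced with Python's built-in `sqlite3` module. This is a hand-written probe rather than an AI scanner: it feeds classic injection payloads into a vulnerable login function (string-built SQL) and a safe one (parameterized query) and reports which payloads log in without the real password. The table, user, and function names are hypothetical.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (username TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")
    return conn


def login_vulnerable(conn: sqlite3.Connection, username: str, password: str) -> bool:
    # String formatting lets attacker-controlled input change the SQL itself.
    query = f"SELECT 1 FROM users WHERE username = '{username}' AND password = '{password}'"
    return conn.execute(query).fetchone() is not None


def login_safe(conn: sqlite3.Connection, username: str, password: str) -> bool:
    # Placeholders keep input as data, never as SQL syntax.
    query = "SELECT 1 FROM users WHERE username = ? AND password = ?"
    return conn.execute(query, (username, password)).fetchone() is not None


PAYLOADS = ["' OR '1'='1", "alice' --"]


def probe(login) -> list[str]:
    """Return payloads that log in without knowing the real password."""
    conn = make_db()
    return [p for p in PAYLOADS if login(conn, p, p) or login(conn, p, "wrong")]


print("vulnerable:", probe(login_vulnerable))
print("safe:", probe(login_safe))
```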

9. Continuous Testing in DevOps and CI/CD

- AI integrates with DevOps pipelines for real-time testing.

- Automates test execution after every code change.

- AI speeds up feedback loops and enables faster releases.

So, the process is: Developer pushes new code → AI triggers automatic tests → Only stable builds move forward.
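The "only stable builds move forward" step can be sketched as a gate that runs the suite, retries failures once to separate flaky tests from real breakages, and returns a promote decision. The retry count and the policy of promoting builds whose only problems are flaky tests are assumptions; many teams choose to block on those too.

```python
from typing import Callable


def gate_build(tests: dict[str, Callable[[], bool]],
               retries: int = 1) -> tuple[bool, dict[str, str]]:
    """Run every test and decide whether the build may be promoted.

    A failing test is retried up to `retries` times: 'flaky' means it passed on
    a retry, 'failed' means it never passed. Only builds with no 'failed' tests
    are promoted; flaky tests are reported for follow-up rather than blocking.
    """
    report: dict[str, str] = {}
    for name, test in tests.items():
        if test():
            report[name] = "passed"
        elif any(test() for _ in range(retries)):
            report[name] = "flaky"
        else:
            report[name] = "failed"
    promote = all(status != "failed" for status in report.values())
    return promote, report


# Hypothetical suite: one stable test and one that fails on its first attempt only.
attempts = iter([False, True])
promote, report = gate_build({"test_home": lambda: True,
                              "test_cart": lambda: next(attempts)})
print(promote, report)
```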

10. AI in Exploratory Testing

- AI mimics human testers to explore applications dynamically.

- Identifies edge cases that traditional scripted tests miss.

- Provides information on unexpected behaviors.

Read: How to Automate Exploratory Testing with AI in testRigor.
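A basic form of automated exploration is a random walk over the app's screens that records any action path ending in an error. This sketch models the app as a hypothetical screen graph with one seeded bug (applying a coupon on the payment screen) and uses a fixed random seed so runs are reproducible; AI-driven explorers pick actions more cleverly, but the reporting idea is the same.

```python
import random

# Hypothetical app model: each screen maps available actions to the next screen.
APP = {
    "home": {"open_cart": "cart", "search": "results"},
    "results": {"open_item": "item", "back": "home"},
    "item": {"add_to_cart": "cart", "back": "results"},
    "cart": {"checkout": "payment", "back": "home"},
    "payment": {"pay": "confirmation", "apply_coupon": "ERROR"},  # seeded bug
    "confirmation": {"home": "home"},
}


def explore(app: dict, start: str, steps: int, seed: int = 0) -> list[list[str]]:
    """Random-walk the app and return the action paths that reached an error state."""
    rng = random.Random(seed)
    failures = []
    state, path = start, []
    for _ in range(steps):
        action = rng.choice(sorted(app[state]))
        path.append(action)
        nxt = app[state][action]
        if nxt == "ERROR":
            failures.append(path)
            state, path = start, []  # restart from the entry screen
        else:
            state = nxt
    return failures


failures = explore(APP, "home", steps=2000, seed=1)
print(f"{len(failures)} failing paths; shortest: {min(failures, key=len)}")
```

Reporting the full action path, rather than just the final error, gives developers a ready-made reproduction recipe.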

AI Software Testing vs. Manual Software Testing

During manual testing, human testers execute test cases without the aid of automation tools, verifying software functionality by interacting with the application directly. AI-powered software testing, by contrast, uses artificial intelligence tools to automate test execution, generate test cases, analyze results, and self-heal scripts.

Let us compare them based on various aspects of testing.

Speed and Efficiency

Manual Testing:

- Slow and time-consuming.

- Requires significant human effort.

- Good for exploratory, usability, and ad-hoc testing.

AI Testing:

- Fast execution, can run thousands of tests in parallel.

- AI optimizes tests, reducing redundancy.

- Automated learning helps improve efficiency over time.

Best Choice: AI testing (speed and scalability)

Accuracy and Reliability

Manual Testing:

- Can have human errors (missed bugs, inconsistent test execution).

- Variability in test results due to fatigue or bias.

- Useful for subjective assessments (UI/UX, user behavior).

AI Testing:

- AI reduces human error and delivers consistent test execution.

- Uses historical data to detect patterns and predict defects.

- Self-healing test scripts adapt to UI changes.

Best Choice: AI testing (higher accuracy, fewer errors)

Test Coverage

Manual Testing:

- Limited coverage due to time and resource constraints.

- Can cover real-world scenarios that automation might miss.

AI Testing:

- Can generate and execute thousands of test cases in minutes.

- AI optimizes test coverage by identifying high-risk areas.

- Includes performance, security, regression, and exploratory testing.

Best Choice: AI testing (wider test coverage)

Cost and Resource

Manual Testing:

- Can be costly, in both money and time, because it requires skilled human testers.

- Labor-intensive for large projects.

- A good choice for small projects with limited automation needs.

AI Testing:

- Reduces long-term costs by minimizing manual effort.

- AI-based tools like testRigor automate test creation and execution.

- Can run 24/7 without human intervention.

Best Choice: AI testing (cost-effective in the long run)

Test Maintenance

Manual Testing:

- Requires repeated effort for every software update.

- Test cases need to be manually updated for UI/UX changes.

- Good for one-time, short-term projects.

AI Testing:

- Self-healing tests automatically adjust to application changes.

- Reduces script maintenance efforts.

- AI auto-updates test cases for evolving applications.

Best Choice: AI testing (lower maintenance efforts)

Scalability

Manual Testing:

- Hard to scale across multiple platforms and test cases.

- Requires additional testers for large-scale projects.

AI Testing:

- Scales effortlessly with cloud-based execution.

- Can run parallel tests on multiple devices and browsers.

- Suitable for large-scale enterprise applications.

Best Choice: AI testing (highly scalable). Read more about Test Scalability.

Best Use Cases: Manual Testing vs. AI Testing

| Scenario | Best Choice |
|---|---|
| Usability and UX Testing | Manual |
| Exploratory Testing | Manual |
| Automated Regression Testing | AI |
| Performance and Load Testing | AI |
| Security Testing | AI |
| Repetitive Test Execution | AI |
| AI-Powered Applications | AI |

Comparison Table: Manual vs. AI Testing

| Feature | Manual Testing | AI Testing |
|---|---|---|
| Speed | Slow | Fast |
| Accuracy | Prone to errors | High accuracy |
| Coverage | Limited | High |
| Cost | Expensive (long-term) | Cost-effective |
| Maintenance | High effort | Low (self-healing) |
| Scalability | Limited | Highly scalable |

When to Use What?

- Manual Testing: Best for UI/UX, exploratory, ad-hoc, and small-scale testing.

- AI Testing: Best for regression, automation, large-scale, performance, security, and predictive analytics.

Why do We Need AI in Software Test Automation?

Now that we have seen the role and types of AI testing, let us understand why we need AI in software testing. Digging deeper into traditional test automation reveals certain inherent challenges that often pose significant hurdles to its efficient implementation. Here, we will explore these challenges and show how AI has the potential to mitigate them.

First challenge: Traditional test automation demands highly skilled engineers who excel not only in the technical aspects of setting up the test framework but also in crafting automated tests. Conventional automation frameworks are often rigid when it comes to their structure and architecture. Essentially, while there are myriad ways to construct tests that yield pass or fail results, only a few of these methods guarantee reliable tests that accurately validate the right aspects of a software application.

Second challenge: This one arises from the design of traditional automation frameworks. Traditional automated tests require some knowledge of the underlying implementation details of the software under test. As a result, tests are usually constructed from an engineer’s perspective rather than from an end-user’s viewpoint. This means that elements are often identified by their technical identifiers, such as IDs or XPaths, rather than by their contextual usage or appearance as perceived by a user.

Third challenge: Traditional automation tests suffer from complexity and relatively low readability. Consequently, once automated tests are set in place, they are seldom revisited to reassess their relevance, accuracy, or effectiveness. This could lead to outdated or inefficient tests persisting in the test suite, reducing the efficiency of the testing process.

The integration of AI into software testing has opened up new avenues to address these longstanding issues. With AI, it is now possible to significantly simplify test creation, bridge the gap between the perspectives of end-users and engineers, and encourage regular reassessment of automated tests to preserve their relevance and effectiveness.

You can have a look at this video to find out how generative AI has changed the way tests are created. Just plain English, and you are good to go.

How to Use Artificial Intelligence in Software Testing?

Let us review the steps that you can take to use AI in your software testing processes:

Identify Areas Where AI Can Improve Testing

Before implementing AI, determine which testing areas will benefit the most, such as:

- Test case generation (reducing manual effort)

- Test execution optimization (faster test runs)

- Defect prediction and root cause analysis

- Self-healing automation (handling UI changes)

- Performance, security, and visual testing

Example: Instead of having someone manually write test scripts for an e-commerce site, you can use AI tools like testRigor to generate test cases automatically.

Then choose one of the methods of using AI in automation testing described in the next section.

Methods of AI in Automation Testing

There are two ways to use AI in your test automation processes:

Build your own AI

Suppose you are using Selenium for automation testing. You can enhance the automation by adding AI to these scripts. However, this requires considerable time and effort, as well as AI and Selenium experts, which can make it an expensive undertaking. Here is a blog on how to combine Selenium and AI: Selenium AI. Another relevant article is AI to make Jest testing smarter. These are a few disadvantages of this method:

- High initial investment – requires skilled data scientists and AI engineers.

- Longer development time – compared to ready-made AI tools.

- Complex maintenance – AI models require frequent retraining.

Use proprietary AI tools

Many AI-based test automation tools on the market spare you from building your own AI. These tools come packed with self-healing, AI-powered test generation, detailed reports, logs, test execution videos, LLM and chatbot testing, visual testing, accessibility testing, and other powerful capabilities. These are the advantages:

- Quick implementation – no need to build AI models from scratch.

- Lower maintenance – AI adapts automatically to application changes.

- Works out-of-the-box – integrates easily with existing test frameworks.

Where Can Artificial Intelligence Software Testing Help?

- Regression testing

- Smoke and sanity testing

- Cross-browser and cross-platform testing

- Applications with frequent UI updates

- Web and mobile automation

- Large applications needing extensive test coverage

- AI-powered exploratory testing

- Responsive design testing

- Multi-device UI validation

- Large-scale applications (e.g., e-commerce, banking, SaaS)

- Cloud-based performance testing

- Continuous security testing in CI/CD

- API security validation

- Reducing debugging time

- Optimizing defect triaging

- Agile and DevOps teams

- Continuous testing in CI/CD

- Finding hidden bugs

- Testing new features without predefined cases

- Teams without coding expertise

- Fast test automation with minimal effort

Where is Artificial Intelligence Software Testing Less Helpful?

- Evaluating user interface intuitiveness

- Checking user satisfaction

- Unscripted exploratory testing

- Testing unknown, new features

- Testing compliance with ethical guidelines

- Legal and policy-based testing

- Testing apps built on low-code app development platforms

- Testing self-learning AI models

- Evaluating AI bias and fairness

- Startups with one-time testing needs

- Projects with limited automation scope

Benefits of AI in Software Testing

1. Faster Test Execution and Reduced Testing Time

- AI automates test execution, running thousands of tests in minutes.

- Supports parallel testing across multiple devices and platforms.

- Reduces time-to-market for software releases.

2. Increased Test Accuracy and Reliability

- Reduces human errors caused by fatigue or oversight.

- Consistent test execution across different environments.

3. Self-Healing Test Automation (Less Maintenance)

- AI updates test scripts automatically when UI elements change.

- Adapts to UI updates without manual intervention, reducing the effort spent fixing broken automation scripts.

4. AI-Driven Test Case Generation

- AI analyzes application behavior and auto-generates test cases.

- Covers edge cases and complex scenarios that humans might overlook.

- Reduces test coverage gaps and increases overall quality.

5. Optimized Test Execution and Smart Prioritization

- AI prioritizes critical test cases based on historical defect data and reduces execution time.

- Eliminates redundant test cases to speed up release cycles.

6. Enhanced Visual and UI Testing

- AI detects UI inconsistencies across different screen sizes and resolutions, going beyond pixel-based comparisons.

- Identifies broken layouts, misaligned elements, and incorrect fonts.

7. Early Bug Detection and Predictive Analysis

- AI analyzes past test results to predict where future bugs are likely to occur.

- Helps developers fix defects before they impact users.

- Reduces the cost of fixing defects in later development stages.

8. AI-Powered Performance and Load Testing

- Simulates real-world user behavior under different loads. Identifies bottlenecks and performance issues.

- Predicts system failures and suggests auto-scaling solutions.

9. AI in Security Testing (Vulnerability Detection)

- AI scans code for security vulnerabilities before deployment.

- Runs automated penetration tests that detect SQL injection, XSS, and authentication flaws, helping prevent cyberattacks.

10. AI for Continuous Testing in DevOps and CI/CD

- AI integrates with CI/CD pipelines to automate testing at every stage.

- It provides real-time defect feedback, reduces deployment risks, and lets only stable builds move forward to production.

11. AI in Exploratory Testing (Unscripted Bug Hunting)

- AI navigates applications dynamically, simulating human exploratory testing.

- Finds unexpected issues beyond predefined test cases.

12. AI-Enabled Test Automation for Non-Technical Users

- AI enables plain English test automation, removing the need for coding.

- Makes test automation accessible for business users and non-developers.

Top Pros of Using AI in QA Automation

| Benefit | Impact |
|---|---|
| Faster Test Execution | Reduces test execution time from months to hours |
| Higher Accuracy | Eliminates human errors and produces reliable test results |
| Self-Healing Tests | Adapts to UI changes without manual script updates |
| Smarter Test Coverage | AI generates edge cases that humans might miss |
| Optimized Test Execution | Prioritizes high-risk areas for efficient testing |
| Early Bug Detection | Finds defects before they reach production |
| Scalability | Runs parallel tests across multiple platforms |
| Security Improvements | Detects vulnerabilities before attackers do |
| No-Code Test Automation | Enables business users and everyone on the team to automate tests |

Top Cons of Using AI in QA Automation

| AI Struggles With … | Why? | Alternative |
|---|---|---|
| High Setup Time | Scripting required | Use proprietary AI frameworks |
| Exploratory Testing | Lacks human intuition | Combine AI with manual testing |
| Usability and UX Testing | Can’t evaluate detailed user experience | Conduct human usability testing |
| Business Logic Validation | Doesn’t understand domain knowledge | Use domain experts for testing |
| Rapidly Changing Apps | Needs retraining | Use hybrid AI + manual testing |
| False Positives/Negatives | Misinterprets results | AI + human verification |

What is the Future of AI in Software Testing?

- Fully Autonomous AI-Driven Testing (Self-Testing Software): AI learns from user interactions, continuously adapts test cases, and prioritizes defect resolution based on risk.

- AI-Powered Code Testing and Automated Debugging: AI analyzes historical bug reports, warns developers about unstable code, and provides real-time debugging suggestions.

- AI-Driven Exploratory and Unscripted Testing: AI learns from previous failures, refines testing strategies, and identifies functionality gaps that predefined tests miss.

- Smart Test Case Optimization and Risk-Based Testing: AI scans test reports, predicts unstable modules, and rearranges test execution orders in real time.

- AI-Powered UI and Visual Testing: AI intelligently compares UI elements, ensuring a seamless user experience across different devices.

- AI in Performance, Load, and Scalability Testing: AI-driven load testing bots analyze historical metrics and suggest infrastructure optimizations.

- AI-Powered Security and Threat Detection: AI scans millions of security logs, performs automated penetration tests, and prevents cyber threats in real time.

- AI in Continuous Testing and DevOps Pipelines: AI continuously scans commits, auto-triggers tests, and recommends rollback strategies for unstable builds.

Will AI Replace Manual Testers?

No, but it will enhance their roles by:

- Automating repetitive tasks

- Identifying test gaps faster

- Allowing testers to focus on exploratory, usability, and security testing

- Last but not least, applying strong domain knowledge, which is key to testing LLMs, chatbots, and other AI systems

The Future of AI in Testing: Key Takeaways

| Trend | Impact |
|---|---|
| Self-Testing Software | AI will create, execute, and maintain tests autonomously. |
| Self-Healing Test Scripts | AI will update automation scripts dynamically. |
| AI-Driven Debugging | AI will detect, analyze, and fix bugs automatically. |
| Smarter Test Execution | AI will optimize test coverage for efficiency. |
| AI in Security Testing | AI will prevent security breaches proactively. |
| AI in Performance Testing | AI will predict and fix scalability issues in real time. |

AI Testing Tools

1. testRigor

testRigor is a generative AI-powered intelligent no-code test automation platform. It is designed to help manual testers, business users, and developers automate web, mobile (hybrid/native), desktop, API, and database tests without writing code. It eliminates test flakiness, automatically adapts to UI changes, and integrates seamlessly with CI/CD workflows. testRigor’s users have been using these advanced features to boost their QA testing efficiency dramatically. They’ve already run over 100 million tests on the testrigor.com platform. This translates to millions of QA hours saved from mundane, repetitive tasks. These valuable hours were instead reallocated to more strategic testing activities, leading to improved quality outcomes.

testRigor supports different types of testing, such as native desktop, web, mobile, API, visual, exploratory, AI features, accessibility testing, and many more.

Key Features of testRigor


Plain English Test Scripting (No-Code Testing): Write test cases in natural language, making automation accessible to non-technical users.

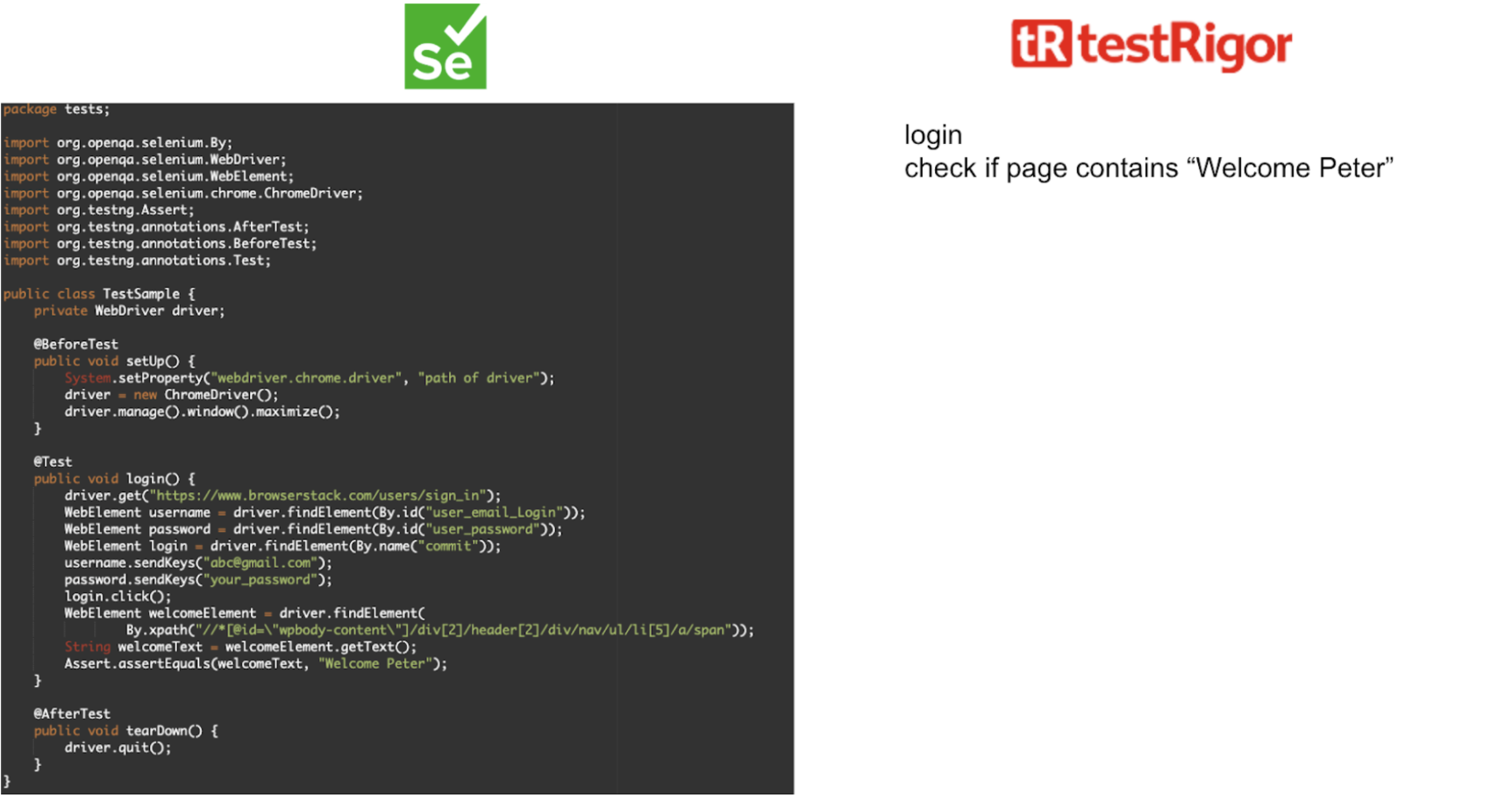

Example: Instead of writing the above complex Selenium code, testers can write:

    login
    check if page contains "Welcome Peter"


Self-Healing Test Automation

- AI automatically updates test scripts when the UI element’s position or name changes on screen.

- Reduces test maintenance costs and eliminates flaky tests.


Comprehensive Test Coverage (Web, Mobile, API, Desktop)

- Supports cross-platform testing including web, iOS, Android, API, and desktop applications.

- Simulates real user interactions like clicking, scrolling, and typing.


Parallel Execution and Cloud-Based Testing

- Run tests in parallel on multiple browsers and devices.

- Supports cloud-based execution for scalability.


AI-Powered Test Generation

- Generates test cases based on app description, specifications, and test case description. Read: All-Inclusive Guide to Test Case Creation in testRigor.

- Supports BDD and SDD (Specification-Driven Development) in the true sense: you can use the specifications themselves as automated test cases.


Integration with CI/CD and DevOps Pipelines

- Works with Jenkins, GitHub Actions, GitLab, Azure DevOps, and CircleCI.

- Enables continuous testing in Agile and DevOps environments.

API Testing with Natural Language

Allows writing API tests in plain English, making it easy for everyone.

    call api post "http://dummy.restapiexample.com/api/v1/create" with headers "Content-Type:application/json" and "Accept:application/json" and body "{\"name\":\"James\",\"salary\":\"123\",\"age\":\"32\"}" and get "$.data.name" and save it as "createdName" and then check that http code is 200

Visual and Accessibility Testing

- Detects UI inconsistencies, broken layouts, and accessibility issues.

- Helps achieve compliance with accessibility standards.


LLM and AI Feature Testing

- testRigor is an AI agent, and you can use it to test LLMs, chatbots, and more. Read: Top 10 OWASP for LLMs: How to Test?

- You can use it to test AI features such as sentiment analysis, positive/negative statement analysis, true/false statements, etc.

Who Should Use testRigor?

- Manual testers transitioning into automation (No coding required).

- Agile and DevOps teams that need fast, AI-powered test automation.

- Business users and non-technical professionals looking for easy test scripting.

- CI/CD-driven teams that need AI-powered regression testing.

Use For: Web, mobile (hybrid/native), desktop, ERP, CRM, Salesforce, API, cross-browser, UI, and end-to-end testing.

2. Selenium + AI Plugins

- No built-in AI, but it can integrate with PyTorch, TensorFlow, and Healenium (self-healing).

- Supports Java, Python, JavaScript, C#, and cross-browser testing.

- Highly customizable but requires coding skills.

- Parallel execution using Selenium Grid.

Use For: Programming and AI-expert teams looking to integrate AI into open-source automation.

3. RobotFramework-AI

- Uses computer vision and deep learning for element detection.

- NLP-based keyword-driven testing.

- Supports web, mobile, API, and desktop automation.

- Integrates with AI libraries like TensorFlow, OpenCV, and Healenium.

Use For: Open-source users wanting AI-powered object recognition and self-healing.

4. Parasoft SOAtest

- Supports REST, SOAP, and GraphQL API testing.

- AI-based test case generation and security testing.

- CI/CD integration with Jenkins, GitHub, and DevOps tools.

Use For: AI-driven API and microservices testing.

5. DevAssure

- Uses AI for predictive analytics and performance monitoring.

- Scalability testing simulates high-traffic conditions.

- Works on-premise and in the cloud.

Use For: Teams focused on performance and scalability testing.

6. Auto-Playwright

- AI automatically updates test scripts when UI changes.

- Supports cross-browser testing (Chrome, Firefox, Safari, Edge).

- CI/CD integration with Jenkins and GitHub Actions.

Use For: Teams using Playwright wanting AI-powered self-healing and automation.

7. UiPath

- AI-driven object recognition reduces flaky tests.

- Codeless, drag-and-drop workflows for test automation.

- Supports web, mobile, desktop, and ERP testing (SAP, Salesforce, ServiceNow).

Use For: Enterprise users needing test automation + RPA (Robotic Process Automation).

8. Aqua ALM

- AI prioritizes high-risk test cases for efficient execution.

- Supports manual, automated, and exploratory testing.

- Integrates with Selenium, Playwright, JUnit, and Appium.

Use For: Enterprises needing AI-driven test planning, defect tracking, and compliance testing.

9. Digital.ai

- AI detects high-risk test cases and predicts defects.

- Supports mobile and web test automation.

- Works with Jenkins, GitHub, and Azure DevOps.

Use For: Teams practicing CI/CD needing AI-driven test prioritization.
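Risk-based prioritization, as described for Aqua ALM and Digital.ai, can be pictured as scoring each test and running the riskiest first. A minimal Python sketch, assuming each test records its recent failure rate and the source files it covers. The `RecordedTest` type and the 0.6/0.4 weighting are hypothetical, and commercial tools use their own models.

```python
from dataclasses import dataclass


@dataclass
class RecordedTest:
    """Hypothetical per-test history used for prioritization."""
    name: str
    failure_rate: float       # fraction of recent runs that failed, 0..1
    covered_files: frozenset  # source files this test exercises


def risk_score(test, changed_files, w_change=0.6, w_history=0.4):
    """Weighted mix of change overlap and failure history (weights are assumptions)."""
    if test.covered_files:
        overlap = len(test.covered_files & changed_files) / len(test.covered_files)
    else:
        overlap = 0.0
    return w_change * overlap + w_history * test.failure_rate


def prioritize(tests, changed_files):
    """Order tests from highest to lowest risk score."""
    return sorted(tests, key=lambda t: risk_score(t, changed_files), reverse=True)


tests = [
    RecordedTest("test_login", 0.05, frozenset({"auth.py", "session.py"})),
    RecordedTest("test_checkout", 0.30, frozenset({"cart.py", "payment.py"})),
    RecordedTest("test_profile", 0.00, frozenset({"profile.py"})),
]
changed = frozenset({"payment.py"})
print([t.name for t in prioritize(tests, changed)])
# ['test_checkout', 'test_login', 'test_profile']
```

Tuning the two weights shifts the balance between reacting to freshly changed code and re-running historically fragile tests.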

10. HeadSpin

- Real-device cloud testing eliminates the need for physical devices.

- AI-driven UX monitoring, geolocation, and network performance analysis.

- Works with iOS, Android, and web apps.

Use For: Performance testing across real-world devices, networks, and geographies.

11. NeoLoad

- AI-assisted performance testing for web and mobile apps.

- Predicts bottlenecks and optimizes cloud scaling.

Use For: Performance and load testing.
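To make "predicts bottlenecks" concrete, here is a minimal sketch: fit a straight line to p95 latency measured at a few load levels, then extrapolate to the point where it would cross a latency SLA. The measurements, the 500 ms SLA, and the linear model are all assumptions for illustration; this is not NeoLoad's method.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def predict_breaking_load(users, p95_ms, sla_ms):
    """Estimate the user count at which p95 latency would reach the SLA."""
    slope, intercept = fit_line(users, p95_ms)
    if slope <= 0:
        return None  # latency is not growing with load; no crossing predicted
    return (sla_ms - intercept) / slope


# Hypothetical measurements from a load-test ramp.
users = [100, 200, 300, 400]
p95_ms = [210, 260, 310, 360]
print(predict_breaking_load(users, p95_ms, sla_ms=500))  # 680.0
```

A straight line is the crudest possible model. Real latency usually bends sharply upward as a resource saturates, so an estimate like this tends to be optimistic and should be confirmed with an actual load test.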

Conclusion

The future of software testing lies in AI-human collaboration. While AI accelerates and optimizes testing, human expertise remains essential for creativity, strategic thinking, and decision-making. Companies that successfully integrate AI into their testing workflows will experience higher efficiency, improved test coverage, and faster releases.

FAQs

What is the role of testers in AI?

Testers play a critical role in AI-driven testing: they verify that AI models are accurate, fair, and reliable. Their responsibilities include training models, checking AI predictions, performing exploratory testing, testing for fairness, monitoring tests, and maintaining compliance.

Will AI take over QA?

No. AI will not take over QA, but it will transform it. AI improves automation and test efficiency and reduces repetitive tasks, yet QA still requires human oversight for exploratory testing, usability and UX testing, business logic validation, compliance and security analysis, and test strategy and decision-making.


How will AI change software testing?

- AI writes, executes, and maintains test cases dynamically.

- Reduces test flakiness by adapting to UI changes automatically.

- Predicts potential bugs before they surface.

- Runs tests in parallel across multiple platforms.

- Focuses on high-risk areas first.

- Detects UI issues beyond simple pixel-based comparisons.

AI will not replace testers, but it will remove repetitive and time-consuming tasks so that testers can focus on more complex and strategic testing.

What is the role of AI in software engineering and testing?

AI is becoming an integral part of agile development, DevOps, and CI/CD. It is used for:

- Automated code testing and debugging

- Checking software performance under different conditions

- Security testing by detecting vulnerabilities

- Generating and executing test cases using AI models

- Reducing test maintenance efforts through self-healing automation

- Improving DevOps with AI-powered continuous testing


Can AI write test cases?

Yes, AI can generate test cases automatically based on:

- Application behavior

- Specifications

- Test and app descriptions

- User interaction history

- Previous test execution results

- Defect trends and high-risk areas
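A full AI generator is not needed to see what deriving test cases from a specification looks like. The sketch below is a deliberately simple, rule-based stand-in (classic boundary-value analysis, not an AI model): given a hypothetical spec for an integer field with an inclusive range, it produces the inputs to check at and around each boundary, along with the expected outcome. AI-based tools aim to infer cases like these from richer sources, such as app descriptions or user interaction history.

```python
def boundary_cases(field, minimum, maximum):
    """Derive boundary-value test cases for an inclusive integer range.

    Rule-based boundary-value analysis, used here only as a simple stand-in
    for the specification-driven generation that AI tools automate.
    """
    candidates = [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]
    cases = []
    for value in sorted(set(candidates)):  # set() drops duplicates for narrow ranges
        expected = "accept" if minimum <= value <= maximum else "reject"
        cases.append({"field": field, "input": value, "expected": expected})
    return cases


# Hypothetical spec: an "age" field that must be between 18 and 65 inclusive.
spec = {"age": (18, 65)}
for name, (lo, hi) in spec.items():
    for case in boundary_cases(name, lo, hi):
        print(case)
```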

What jobs will AI replace by 2030?

That timeline is too far out to predict with any certainty, but we think AI will take over repetitive, rule-based, and high-volume QA tasks, including manual regression testing, basic functional testing, UI testing, test data generation, and bug reporting.

Will AI replace testers?

No, AI will not replace testers entirely. Instead, it will:

- Automate repetitive tasks, allowing testers to focus on complex testing.

- Improve efficiency, reducing the need for manual test execution.

- Assist in defect detection, though human judgment is still required.

- Increase test coverage, though exploratory testing is still needed.

Is software testing a dead-end job?

No, software testing is evolving, not dying. It is moving from manual execution to AI-powered automation and test strategy development. The demand for test automation engineers, AI-driven testers, and QA strategists is increasing. Companies are investing in AI testing tools, making QA roles more valuable than ever.

Which QA job is most likely to become a dead end?

Relying only on manual regression testing can limit career growth. Companies are shifting towards AI-driven test automation, and QA testers who don't learn AI, automation, or DevOps may struggle to find new opportunities.

Solution: Upskill in test automation, AI-driven testing, domain knowledge, and DevOps CI/CD workflows to stay relevant.

Which QA areas can't be replaced by AI?

Areas where AI is least effective:

- Exploratory Testing – Requires creativity and human intuition.

- Usability and UX Testing – AI can’t assess human emotions and user experience.

- Security and Compliance Testing – Needs expert judgment for ethical AI, regulations, and policies.

- Risk-Based Test Planning – AI lacks the business acumen to prioritize testing.

- Edge Case Testing – AI struggles with unpredictable, non-deterministic behavior.

What is the future of QA with AI?

AI will not eliminate QA but will reshape it by:

- Automating repetitive testing tasks

- Improving accuracy and enabling defect prediction

- Reducing test maintenance with self-healing automation

- Shifting the focus to exploratory, security, and strategic testing

QA testers who adopt AI-powered tools will be in high demand.

Is QA still in demand?

Yes, QA is in high demand, but roles are evolving.

- Companies need skilled automation testers to handle AI-powered tools.

- Cloud-based and AI testing expertise is becoming a priority.

- DevOps and Agile testing roles are growing as businesses move to CI/CD.

QA professionals who upskill in AI, automation, and DevOps will have a competitive edge.

What are the highest-paying opportunities for QA testers today?

Highest-paying QA roles:

- QA Architect – $120K – $180K

- AI Test Automation Engineer – $100K – $160K

- Performance Testing Engineer – $90K – $150K

- Security QA Engineer – $100K – $160K

QA salaries are increasing for those with expertise in AI, automation, and cloud-based testing.

Is software quality assurance dying?

No, QA is evolving, not dying.

- AI-powered testing is improving QA roles, not eliminating them.

- QA engineers with automation and AI expertise are in high demand.

- Cybersecurity, performance testing, and compliance QA roles are growing.
